A Beginner's Guide to Data Validation and Cleaning in Data Analysis

What is Data Validation?

Data validation is the process of ensuring that the data you’re working with meets the required quality standards. It helps ensure that your data is both correct and useful by checking for:

- Accuracy: Data should be free from errors.

- Consistency: Data should follow the same structure or format across the dataset.

- Completeness: Missing or incomplete data should be identified.

- Validity: Data should conform to the expected format or range.
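The sketch below is a minimal pandas example of a completeness check. It counts missing values per column and the share of fully complete rows. The table and its column names (`age`, `signup_date`) are made up for illustration.

```python
import pandas as pd

# Hypothetical example data; column names and values are invented for illustration
df = pd.DataFrame({
    "age": [34, None, 29, 150],
    "signup_date": ["2024-01-15", "2024-02-30", None, "2024-03-01"],
})

# Completeness: count missing values in each column
missing_per_column = df.isna().sum()
print(missing_per_column)

# Share of rows with no missing values in any column
complete_share = df.notna().all(axis=1).mean()
print(f"Complete rows: {complete_share:.0%}")
```

This only covers completeness. The accuracy, consistency, and validity dimensions need rules specific to your data, such as the checks in the next section.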

Common Techniques for Data Validation

- Range Checking: Ensures numerical values fall within a defined range. For example, ensuring ages in a dataset are between 0 and 120.

- Type Checking: Ensures that each field holds the expected data type (e.g., a numeric column should not contain letters).

- Constraint Checking: Validates that data follows specific rules. For instance, dates must follow the "YYYY-MM-DD" format.
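Here is how the three techniques above might look in pandas. This is a minimal sketch under assumptions: the data is invented so that each check catches something, the 0 to 120 age range comes from the example above, and the date rule uses `pd.to_datetime` with an explicit format.

```python
import pandas as pd

# Hypothetical raw data; values are invented to trigger each check
df = pd.DataFrame({
    "age": ["34", "abc", "29", "150"],
    "signup_date": ["2024-01-15", "15/01/2024", "2024-02-30", "2024-03-01"],
})

# Type checking: coerce to numbers; non-numeric entries become NaN
age_numeric = pd.to_numeric(df["age"], errors="coerce")
bad_type = age_numeric.isna() & df["age"].notna()

# Range checking: ages must fall between 0 and 120 (inclusive)
out_of_range = age_numeric.notna() & ~age_numeric.between(0, 120)

# Constraint checking: dates must match YYYY-MM-DD and be real calendar dates
parsed_dates = pd.to_datetime(df["signup_date"], format="%Y-%m-%d", errors="coerce")
bad_date = parsed_dates.isna() & df["signup_date"].notna()

# One flag column per check, so each problem row can be traced to its cause
report = pd.DataFrame({
    "bad_type": bad_type,
    "out_of_range": out_of_range,
    "bad_date": bad_date,
})
print(report)
```

The checks flag rows instead of deleting them. That leaves the decision about what to do with each bad record to the cleaning stage. Note that "2024-02-30" is caught as well: it matches the pattern but is not a real date.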

What is Data Cleaning?

After validating your data, the next step is to clean it. Data cleaning involves correcting or removing inaccurate, incomplete, or irrelevant data from your dataset. This is important to prevent errors in your analysis, which could lead to faulty conclusions.

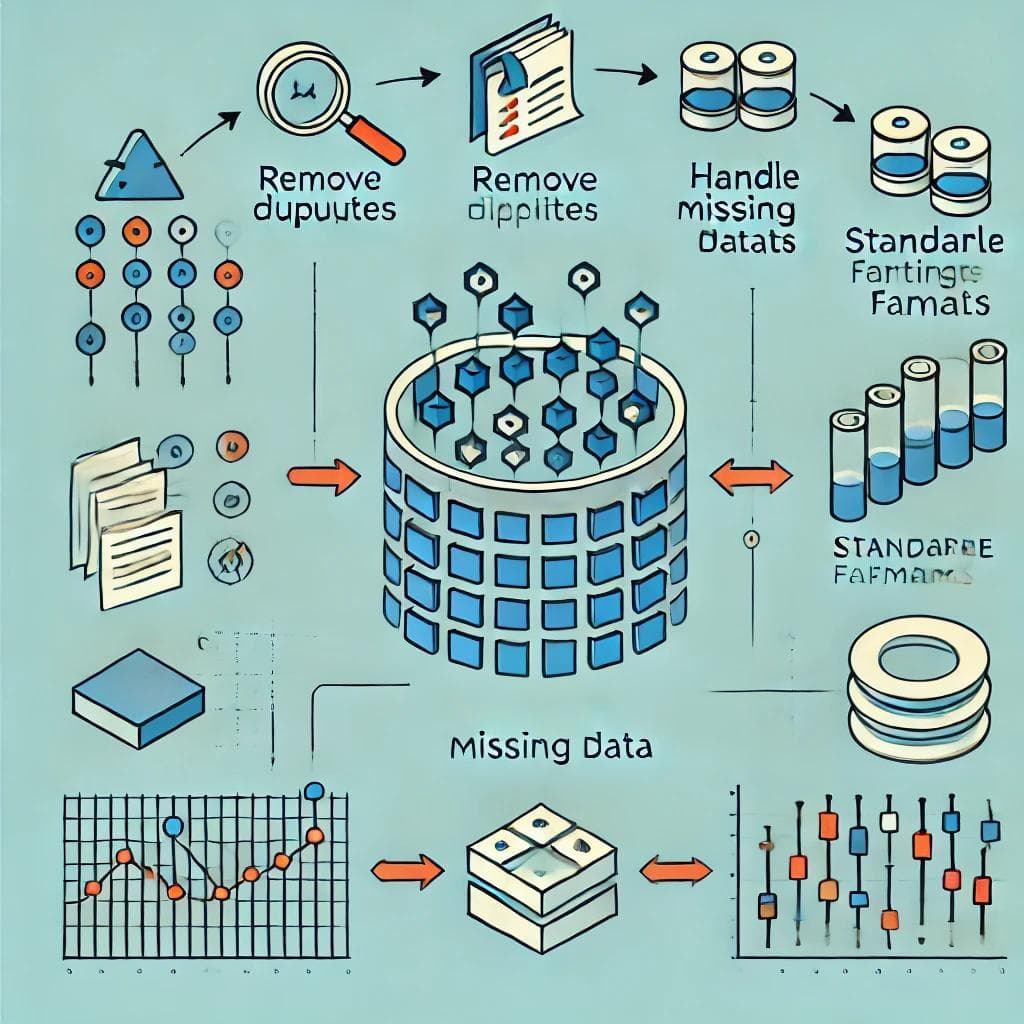

Steps in Data Cleaning

- Handling Missing Data:
  - Replace missing values with the mean, median, or a custom value.
  - Use interpolation or regression techniques to estimate missing values.
  - In some cases, it’s better to remove rows or columns with too much missing data.

- Removing Duplicates:
  - Duplicate data can distort your analysis. Remove any repeated rows to maintain data integrity.

- Standardizing Data Formats:
  - Ensure that your dataset uses consistent formats (e.g., dates, text capitalization).

- Handling Outliers:
  - Outliers can skew results, especially in statistical analysis. You can either remove outliers or investigate whether they hold any significance.

- Fixing Inconsistencies:
  - Ensure consistent naming conventions (e.g., "New York" vs. "NYC") and fix erroneous data points.
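The steps above can be combined into a short pandas pipeline. This is a minimal sketch, not a universal recipe. The data is invented. The "Nyc" to "New York" mapping is an assumption you would build from your own data. Linear interpolation and the 1.5 × IQR outlier rule are common choices picked for illustration.

```python
import numpy as np
import pandas as pd

# Hypothetical messy data; all names and values are invented for illustration
df = pd.DataFrame({
    "city": ["New York", "NYC", "new york ", "Boston", "Boston", "Chicago"],
    "temp_c": [21.0, np.nan, 23.0, 19.0, 19.0, 18.0],
    "sales": [100, 120, 110, 95, 95, 1000],
})

# 1. Standardizing formats: trim whitespace and use title case
df["city"] = df["city"].str.strip().str.title()

# 2. Fixing inconsistencies: map known variants to one canonical name
#    ("NYC" becomes "Nyc" after title-casing; this mapping is an assumption)
df["city"] = df["city"].replace({"Nyc": "New York"})

# 3. Removing duplicates: drop rows that are exact repeats
df = df.drop_duplicates().reset_index(drop=True)

# 4. Handling missing data: linear interpolation between neighbouring rows
#    (df["temp_c"].fillna(df["temp_c"].median()) is a simpler alternative)
df["temp_c"] = df["temp_c"].interpolate()

# 5. Handling outliers: flag values outside 1.5 * IQR for review, don't delete
q1, q3 = df["sales"].quantile([0.25, 0.75])
iqr = q3 - q1
df["sales_outlier"] = ~df["sales"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)

print(df)
```

The order matters. Standardizing text before removing duplicates lets rows that differ only in spelling or spacing be recognized as repeats. Removing duplicates before filling gaps keeps repeated rows from biasing a mean or median fill.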

Tools for Data Cleaning

- Spreadsheet Software: Excel or Google Sheets for smaller datasets.

- Python: Libraries like pandas and numpy are powerful for data manipulation and cleaning.

- SQL: Useful for cleaning and validating data in databases.

- Data Wrangling Tools: Tools like OpenRefine help with larger datasets and more complex cleaning tasks.

Why is Data Validation and Cleaning Important?

- Improved Accuracy: Clean data leads to more reliable and accurate insights.

- Better Decision Making: Clean and validated data leads to better-informed business decisions.

- Avoiding Bias: Data errors and inconsistencies can introduce bias in your analysis, leading to misleading results.

Best Practices for Data Validation and Cleaning

- Establish data quality standards: Define clear guidelines for data quality and ensure adherence to these standards.

- Automate processes: Use tools and automation techniques to streamline data validation and cleaning tasks.

- Document procedures: Clearly document data validation and cleaning processes to ensure consistency and reproducibility.

- Regularly review and update: Periodically review and update data validation and cleaning procedures to adapt to changing data requirements.

- Consider data lineage: Track the history and provenance of data to understand its origin and potential sources of error.
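One way to apply the automation and documentation practices is to keep validation rules as data and run them through a single reusable function. The function returns a summary that can be saved alongside each batch. This is a hypothetical sketch: the `RULES` dictionary, the `validate` helper, and the "signups_2024.csv" label are illustrative names, not part of any library.

```python
import pandas as pd

# Hypothetical rule set: each rule returns a boolean mask of rows that FAIL it.
# Rule names and the 0-120 age range are illustrative assumptions.
RULES = {
    "age_missing": lambda d: d["age"].isna(),
    "age_out_of_range": lambda d: d["age"].notna() & ~d["age"].between(0, 120),
}


def validate(df: pd.DataFrame, rules: dict, source: str) -> pd.DataFrame:
    """Run every rule and return one summary row per rule.

    Recording the data source next to each result gives a simple,
    reviewable record of what was checked and where the data came from.
    """
    summary = [
        {"source": source, "rule": name, "failed_rows": int(check(df).sum())}
        for name, check in rules.items()
    ]
    return pd.DataFrame(summary)


# "signups_2024.csv" is a hypothetical label; nothing is read from disk here
df = pd.DataFrame({"age": [34, None, 29, 150]})
summary = validate(df, RULES, source="signups_2024.csv")
print(summary)
```

Because the rules live in one place, adding or changing a check is a one-line edit. The same checks then run on every new batch, which supports both consistency and reproducibility.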

Conclusion

Data validation and cleaning might seem tedious, but they are the backbone of successful data analysis. As you dive deeper into the field of data analysis, the time spent ensuring clean, validated data will prove invaluable in delivering high-quality, actionable insights.

Fatimah Animashaun

www.linkedin.com/in/fatimah-animashaun